0.Preface

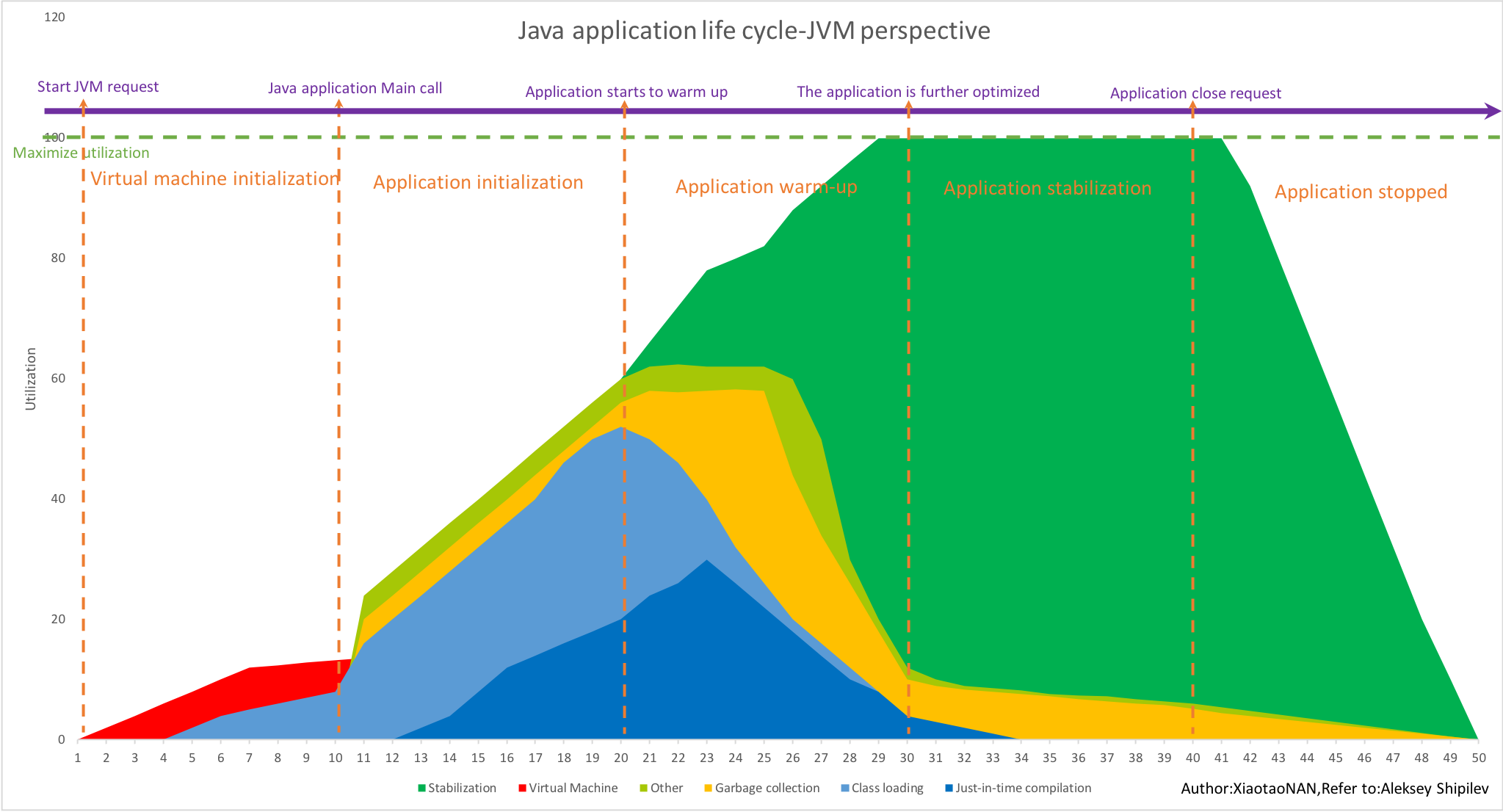

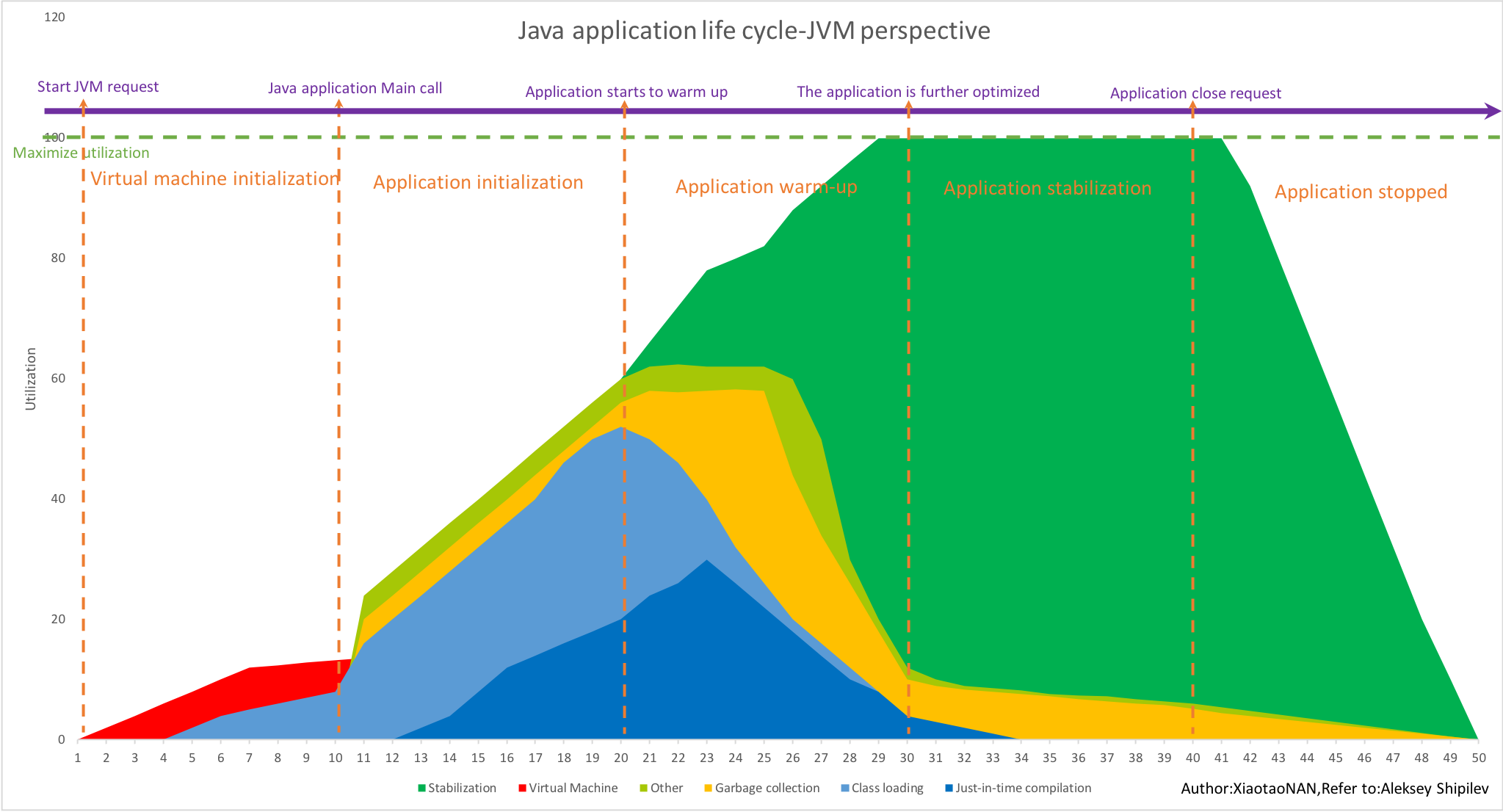

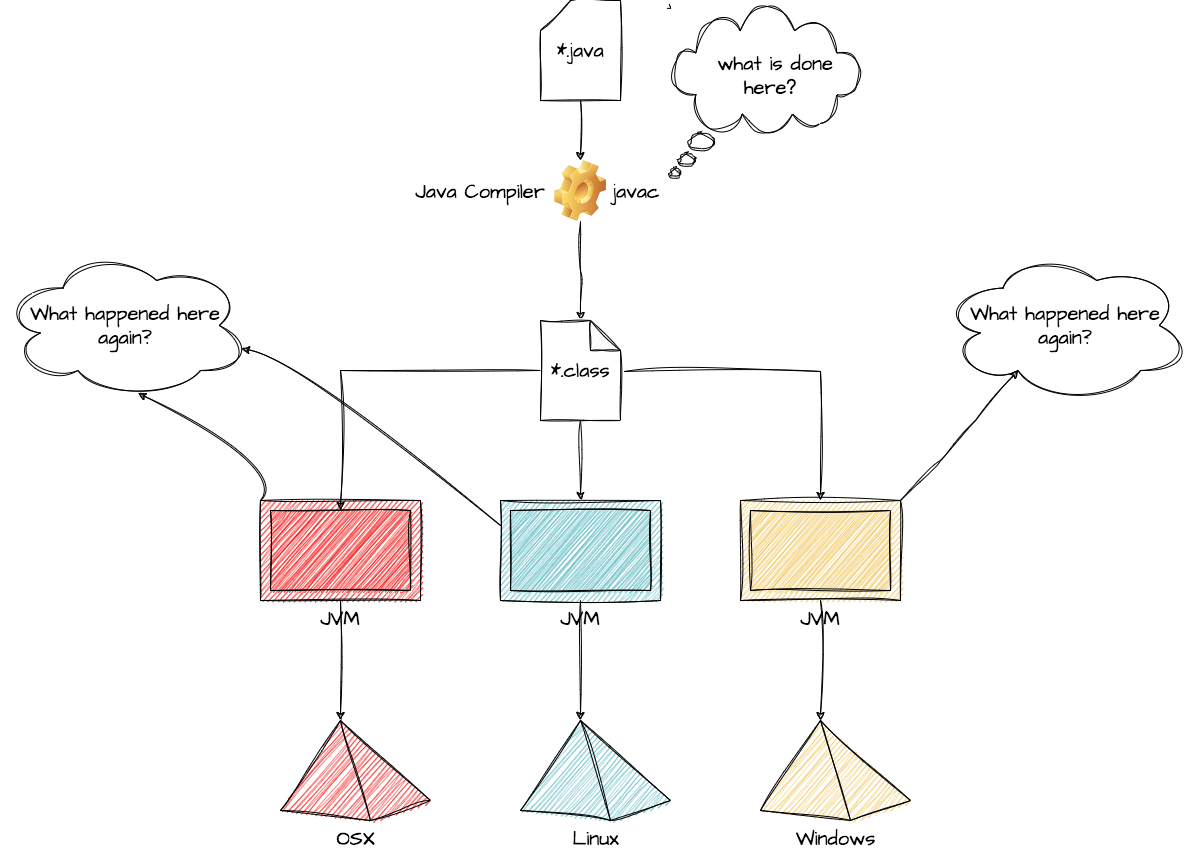

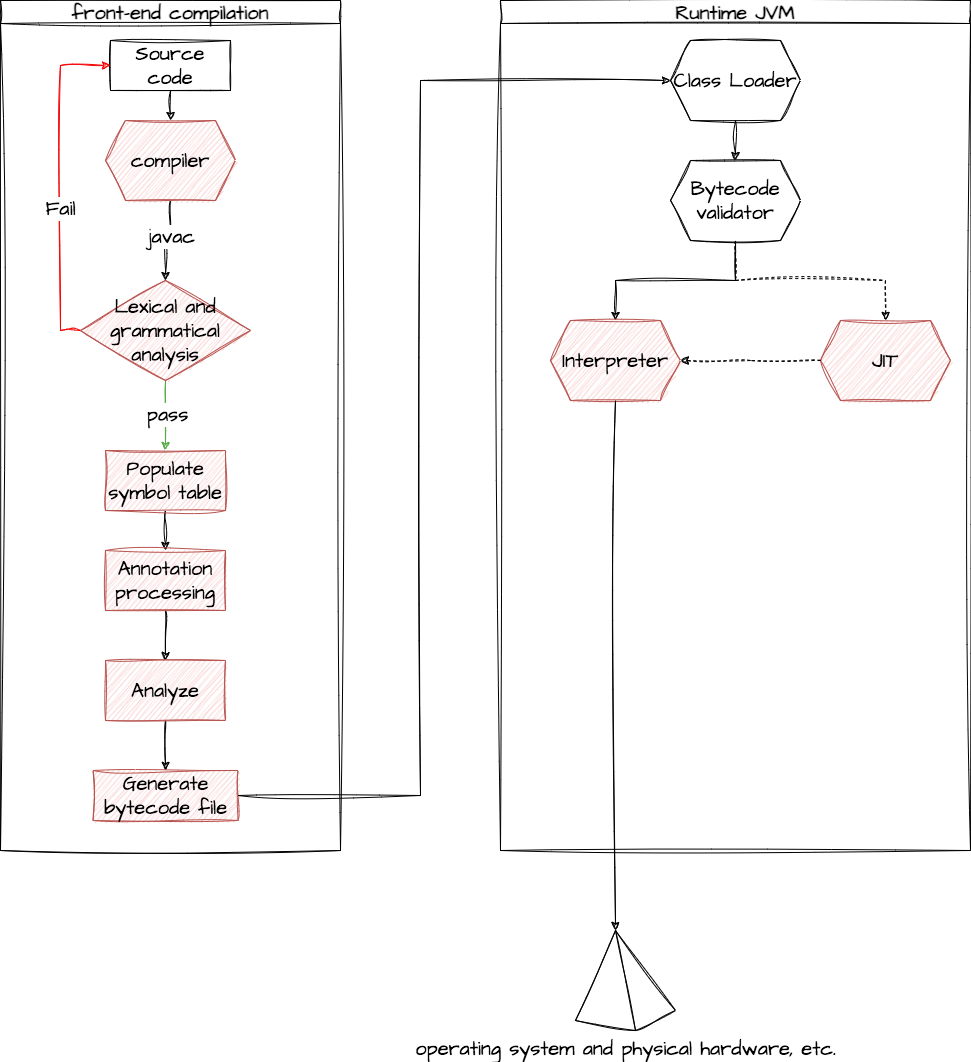

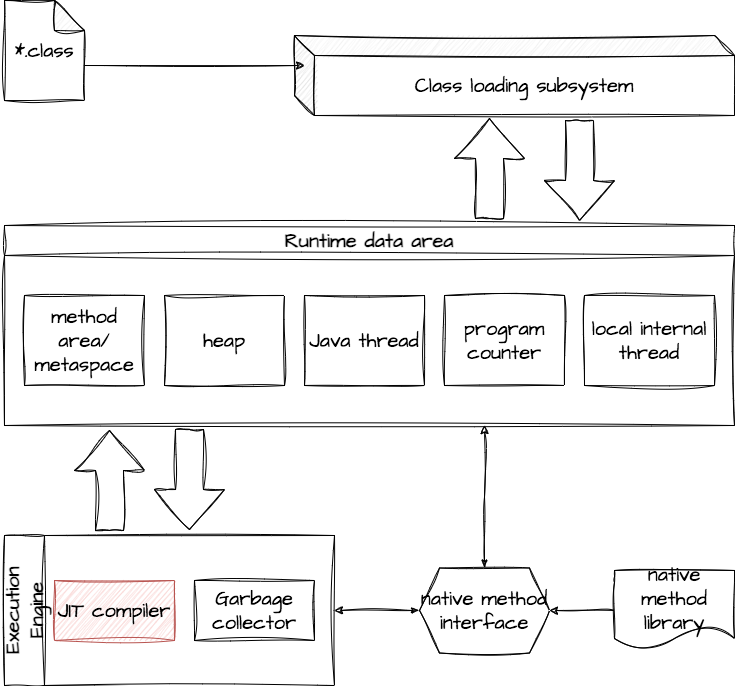

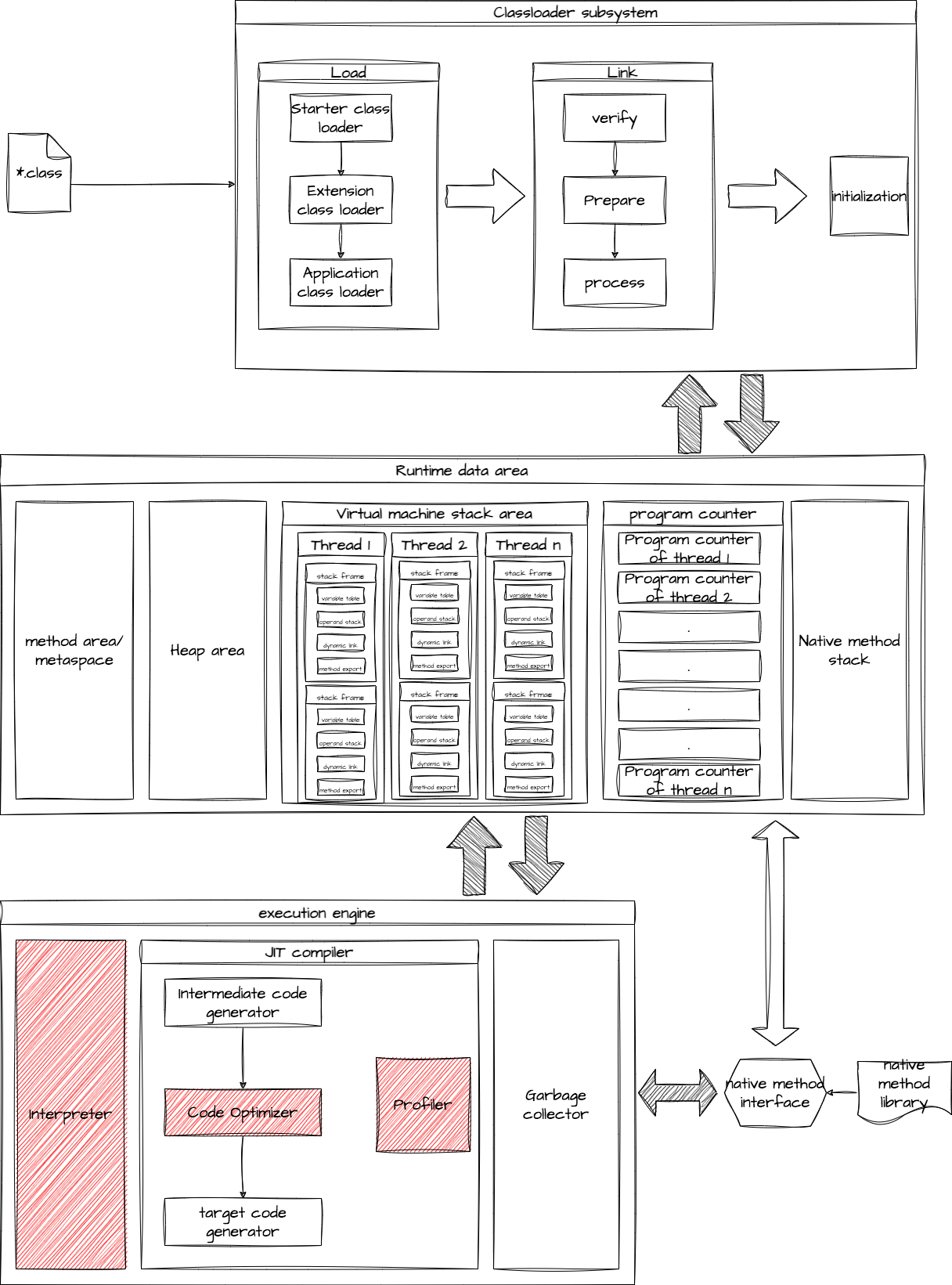

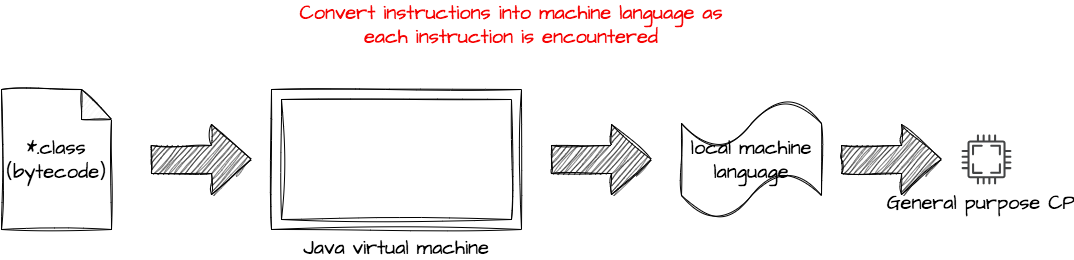

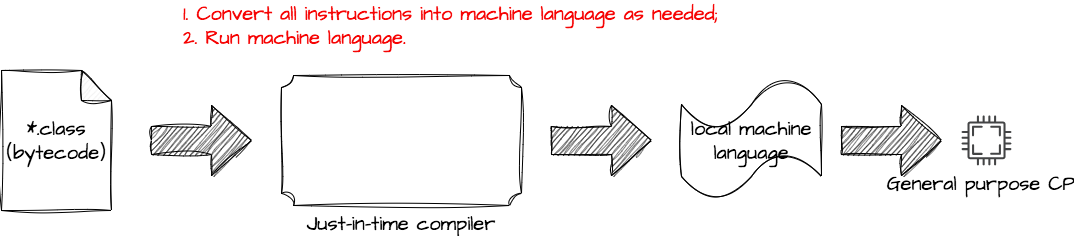

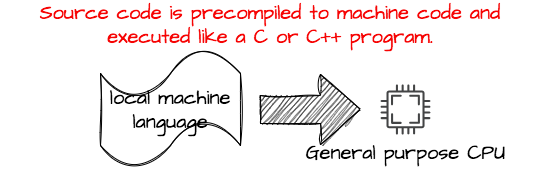

0.1.Java Program Execution Process

0.2.Is Compilation Fast Or Slow?

0.2.1.Java Virtual Machine (interpreted, relatively slow)

0.2.2.Just In Time Compiler (relatively fast)

0.2.3.Java Compiler (relatively fast)

E.g.: the Graal compiler (which can be used as an AOT compiler and can also replace C2 as the JIT compiler), and jaotc (the JDK's experimental AOT compiler).

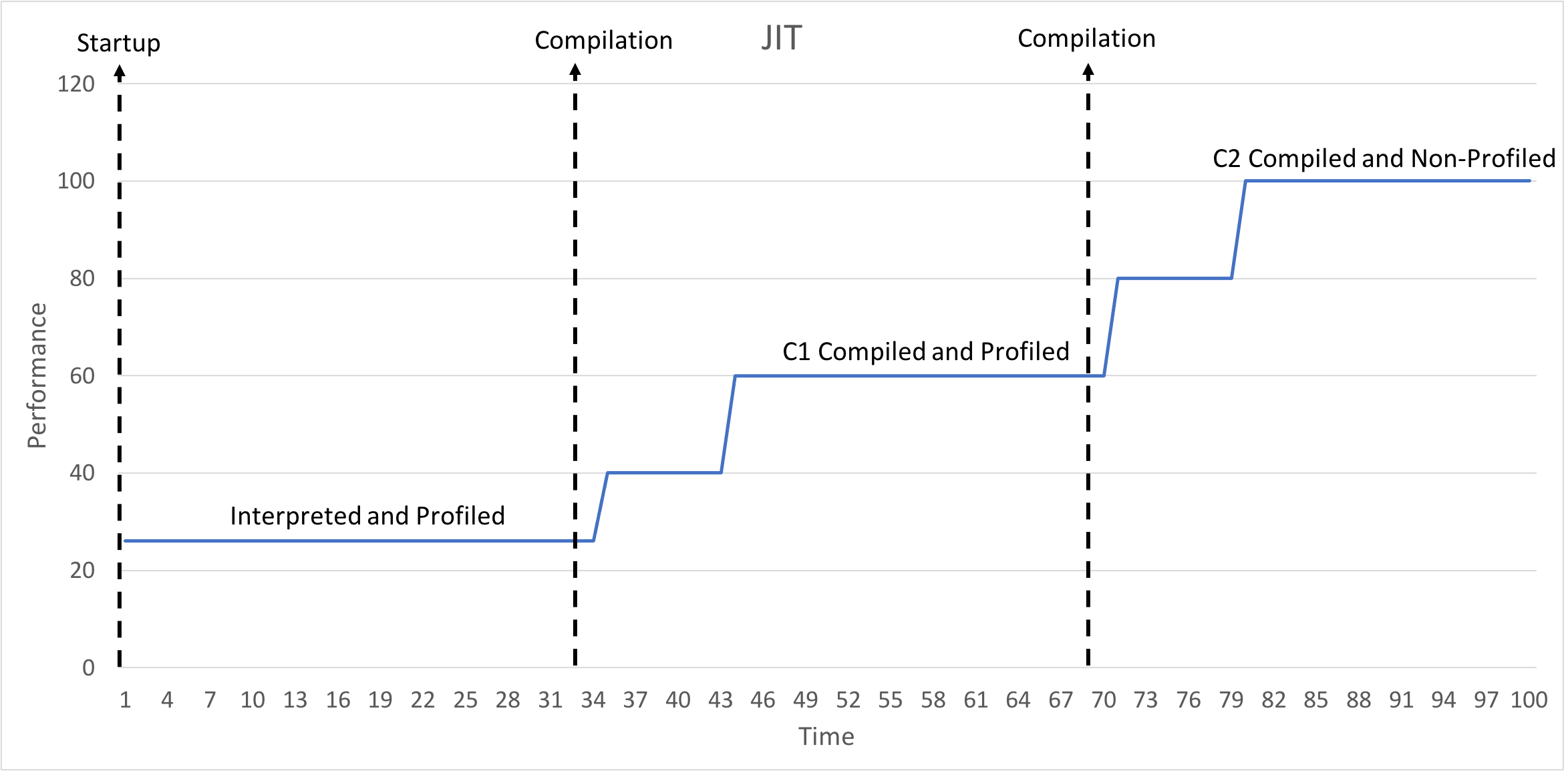

1.JIT Compilation Process

- After a Java application starts and class loading and bytecode verification are complete, the JIT compiler is not triggered immediately; instead, the code is first executed and profiled by the interpreter;

- Once the interpreter has completed this initial execution and profiling, the JVM uses the collected profile to find hot spots (frequently executed code). When hot code is found, C1 compiles it, based on the profile, into reasonably efficient native machine code, so that the JVM now runs that code at native speed. At the same time, the C1-compiled code keeps collecting profiling information;

- Once this further profiling is available, C2 uses it to perform more aggressive and time-consuming optimizations: it recompiles the code into even more efficient machine code, significantly improving the JVM's code performance.

- In summary: C1 improves performance sooner, while C2, using more profiling information about the hot spots, improves it further.
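The tiered behavior described above can be observed with a small timing experiment. The following sketch (the class WarmupDemo and its workload are hypothetical) runs the same method in batches and prints each batch's duration alongside the cumulative JIT compilation time reported by the standard CompilationMXBean. On a typical HotSpot JVM the first batches tend to be slower than later ones, but the exact numbers depend on the machine and JVM flags.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

// Sketch: time the same workload in batches to watch JIT warm-up happen.
class WarmupDemo {

    // Hypothetical "hot" method: a simple arithmetic loop the JIT will compile.
    static long sumOfSquares(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Null when the JVM has no JIT compiler (e.g. when run with -Xint).
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        long sink = 0; // printed at the end so the work cannot be optimized away
        for (int batch = 1; batch <= 10; batch++) {
            long start = System.nanoTime();
            for (int i = 0; i < 2_000; i++) {
                sink += sumOfSquares(1_000);
            }
            long micros = (System.nanoTime() - start) / 1_000;
            String jitTime = (jit != null && jit.isCompilationTimeMonitoringSupported())
                    ? jit.getTotalCompilationTime() + " ms"
                    : "n/a";
            System.out.printf("batch %2d: %6d us, total JIT time so far: %s%n", batch, micros, jitTime);
        }
        System.out.println("checksum: " + sink);
    }
}
```

Adding the HotSpot flag -XX:+PrintCompilation shows the individual methods being compiled, including the tier (1 to 4) at which each compilation happens.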

2.Current Needs

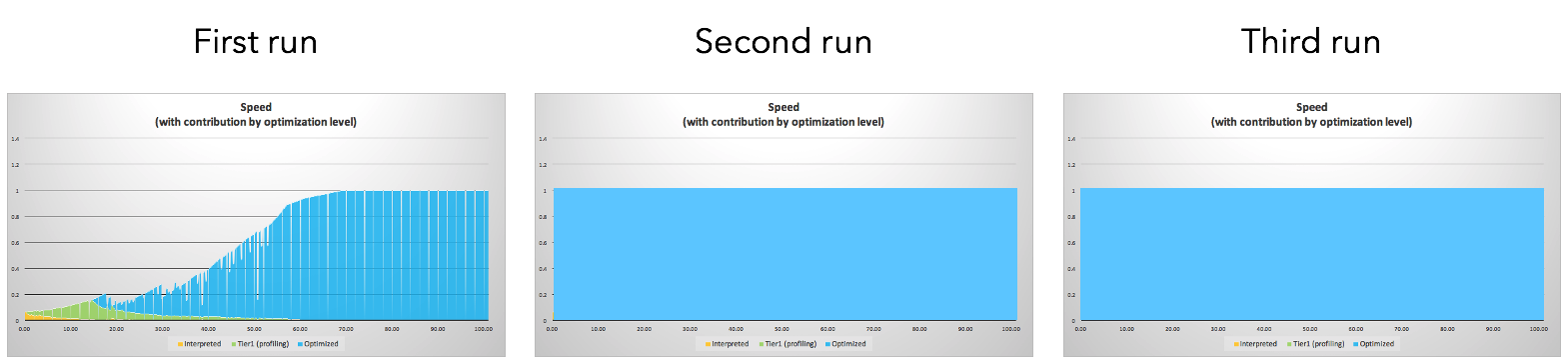

Azul can warm up an application in a simulated environment (exercising hot methods and loop bodies), then inject the results into the production environment and compile directly with C2, reducing compilation time at runtime. This is very effective for scenarios such as securities market quotation systems, which must run at full speed from the very start.

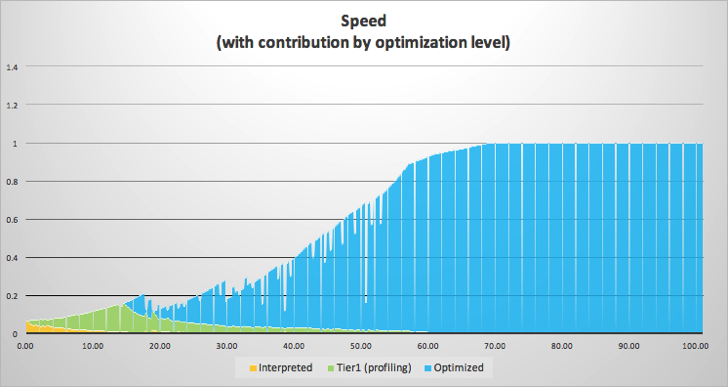

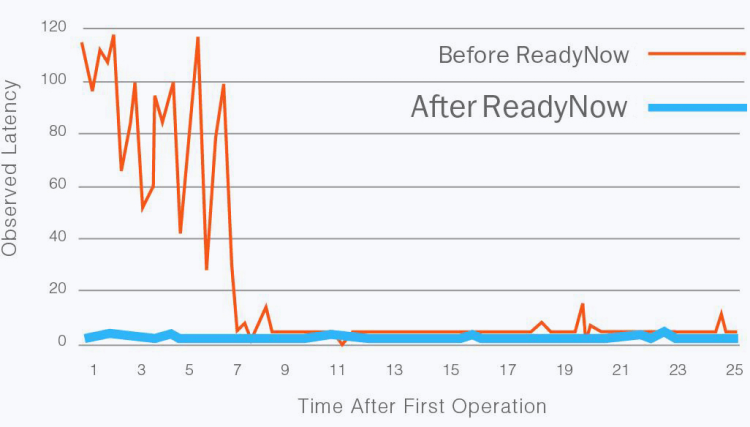

- Horizontal axis: the time taken by the JVM to reach its best code performance;

- Vertical axis: the JVM's code performance, as a degree or ratio of its best performance;

- Glitches: caused by de-optimization or garbage collection.

3.Question

Azul can learn and train in a simulated environment, then feed the training result set into the production environment as a reference, so that the application reaches peak performance at startup and GC glitches are eliminated.

3.1.Current Issues

- Long startup time;

- It takes a long time for the Java Virtual Machine to reach optimal code performance.

3.2.Desired Results

- Short startup time;

- After startup, the Java virtual machine can quickly achieve optimal code performance.

3.3.Desired Goals

- While ensuring that key functions still work correctly, significantly reduce the time it takes to restart;

- Eliminate glitches and enable the Java virtual machine to quickly achieve optimal code performance.

4.Problem Analysis

4.1.What approaches currently exist to accelerate startup?

4.1.1.CDS (Class Data Sharing)

Purpose: dumps the internal representations of JDK classes, application classes and, with dynamic archiving, classes loaded by custom class loaders (dynamic archiving also skips the separate class-list dump step) into an archive file (a .jsa file), which is then shared (memory-mapped) on every JVM startup.

Shortcomings: no optimization or hot-spot detection; only class loading time is reduced, so startup is not significantly accelerated.
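As a quick check of whether CDS is actually in effect, the sketch below reads the java.vm.info system property, which on HotSpot includes the word "sharing" when a CDS archive is mapped (the same text that appears in java -version output), and prints the number of loaded classes. The class name is hypothetical and other JVMs may report this differently. The comments show a typical dynamic AppCDS workflow using -XX:ArchiveClassesAtExit (JDK 13+) and -XX:SharedArchiveFile; app.jar and Main are placeholders.

```java
import java.lang.management.ClassLoadingMXBean;
import java.lang.management.ManagementFactory;

// Sketch: report whether the running HotSpot JVM uses a CDS archive.
// Typical dynamic AppCDS workflow (placeholders: app.jar, Main):
//   java -XX:ArchiveClassesAtExit=app.jsa -cp app.jar Main   # training run, dumps the archive at exit
//   java -XX:SharedArchiveFile=app.jsa -cp app.jar Main      # later runs map the archive
class CdsCheck {

    // HotSpot appends "sharing" to java.vm.info when CDS is in use, e.g. "mixed mode, sharing".
    static boolean sharingEnabled(String vmInfo) {
        return vmInfo != null && vmInfo.contains("sharing");
    }

    public static void main(String[] args) {
        String vmInfo = System.getProperty("java.vm.info");
        ClassLoadingMXBean classes = ManagementFactory.getClassLoadingMXBean();
        System.out.println("java.vm.info   = " + vmInfo);
        System.out.println("CDS in use     = " + sharingEnabled(vmInfo));
        System.out.println("classes loaded = " + classes.getLoadedClassCount());
    }
}
```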

4.1.2.AOT (Ahead-Of-Time compilation: code is compiled to machine code before it runs)

Advantages:

- Runs at “full speed” from the start (GraalVM native images can do this);
- By definition AOT is static: code is compiled before it runs, so there is no compilation overhead at runtime;
- Small memory footprint.

Shortcomings:

- Does not interpret bytecode;
- No hot-spot analysis;
- No runtime compilation of code, and therefore no runtime bytecode generation;
- Limited use of method inlining;
- Reflection is possible but complicated;
- Cannot use speculative optimizations (optimizations that assume certain conditions hold, such as array bounds);
- Must be compiled for characteristics common to all target machines (for example, a generic CPU rather than a specific microarchitecture);
- Because optimization is less thorough, overall performance is usually lower;
- Deployment environment != development environment.

4.1.3.JIT (Just-In-Time compilation: code is compiled to machine code while it runs)

Advantages:

- Aggressive method inlining can be used at runtime (by default HotSpot inlines methods of up to 35 bytes of bytecode, or up to 325 bytes at frequently executed call sites, controlled by -XX:MaxInlineSize and -XX:FreqInlineSize; inlining larger methods more aggressively means longer compilation times and larger generated code);
- Runtime bytecode generation can be used;
- Reflection is simple;
- Speculative optimizations can be used (assuming conditions hold, such as array bounds);
- Code can even be optimized for specific CPU microarchitectures such as Haswell, Skylake and Ice Lake;
- Overall performance is usually higher;
- Deployment environment == development environment.

Shortcomings:

- Takes more time to start (but becomes faster once warmed up);
- Compiling code at runtime adds overhead;
- Larger memory footprint.

4.2.Why do glitches occur? (Glitches directly affect the JVM's optimal performance.)

4.2.1.De-optimization

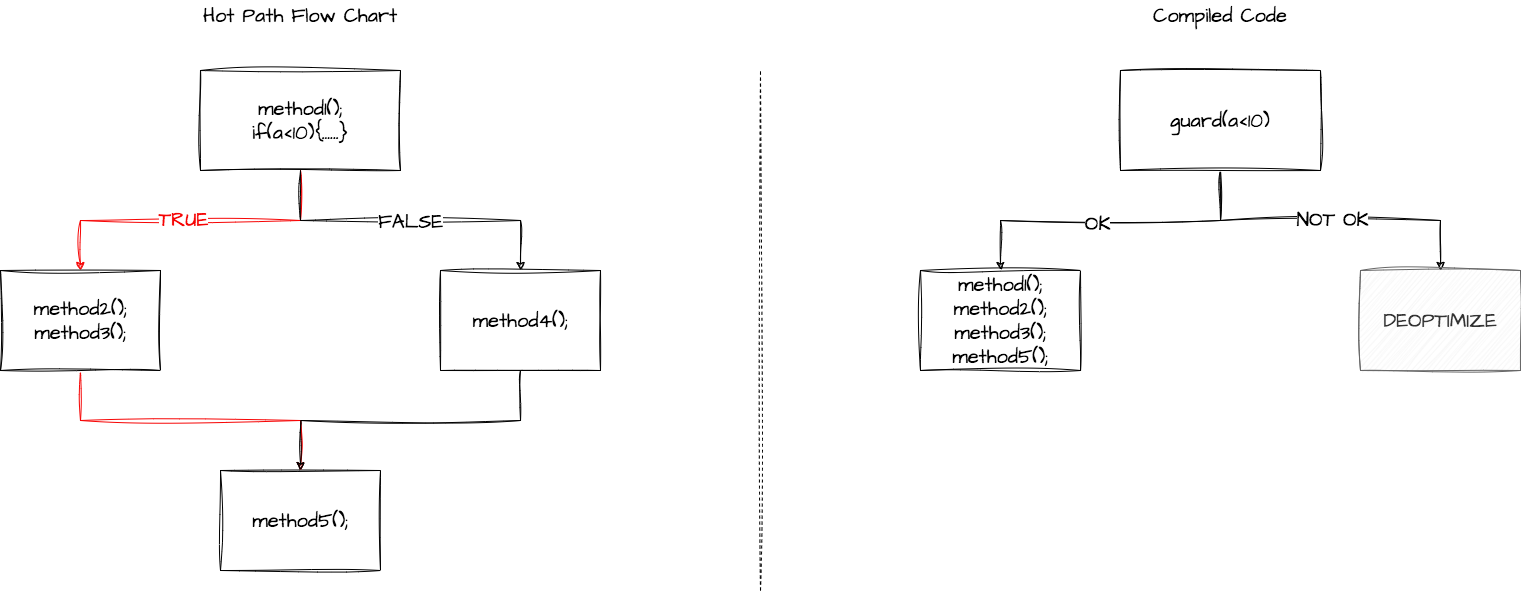

Although C2-compiled code is highly optimized and long-lived, it can still be de-optimized, in which case the JVM temporarily falls back to interpreted execution. De-optimization occurs when the compiler's optimistic assumptions turn out to be wrong: for example, when the profile information no longer matches the method's behavior, the JVM de-optimizes the affected compiled and inlined code as soon as the hot path changes.
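The de-optimization scenario can be reproduced with a sketch like the one below (hypothetical class and shape types; records need Java 16+). A call site first sees only one receiver type, so C2 may speculate on it and inline that implementation; when a second type appears, the speculation no longer holds. Running it with -XX:+PrintCompilation should typically show the compiled totalArea method being marked "made not entrant" and later recompiled, although whether and when this happens depends on the JVM version and its heuristics.

```java
// Sketch: provoke a de-optimization by breaking a profile-based assumption.
// Run with: java -XX:+PrintCompilation DeoptDemo.java
class DeoptDemo {

    interface Shape { double area(); }

    record Square(double side) implements Shape {
        public double area() { return side * side; }
    }

    record Circle(double radius) implements Shape {
        public double area() { return Math.PI * radius * radius; }
    }

    // Hot method: while only Squares are seen, C2 may treat s.area() as
    // monomorphic and inline Square.area() directly.
    static double totalArea(Shape[] shapes) {
        double total = 0;
        for (Shape s : shapes) {
            total += s.area();
        }
        return total;
    }

    // Builds n shapes: all Squares, or alternating Square/Circle.
    static Shape[] fill(int n, boolean squaresOnly) {
        Shape[] shapes = new Shape[n];
        for (int i = 0; i < n; i++) {
            shapes[i] = (squaresOnly || i % 2 == 0) ? new Square(1.0) : new Circle(1.0);
        }
        return shapes;
    }

    public static void main(String[] args) {
        double sink = 0;
        // Phase 1: warm up with a single receiver type (profile says "always Square").
        Shape[] squares = fill(1_000, true);
        for (int i = 0; i < 20_000; i++) sink += totalArea(squares);
        // Phase 2: a new receiver type invalidates the speculation, which leads to
        // de-optimization, a stint in the interpreter, then recompilation.
        Shape[] mixed = fill(1_000, false);
        for (int i = 0; i < 20_000; i++) sink += totalArea(mixed);
        System.out.println("checksum: " + sink);
    }
}
```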

4.2.2.GC operations occur before optimal performance is reached
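The standard GarbageCollectorMXBean API can show how many collections, and how much GC time, fall inside each phase of warm-up. This is a sketch under the assumption that an allocation-heavy loop is a reasonable stand-in for real warm-up traffic; the class name and workload are hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Sketch: count GC events that happen while a workload is still warming up.
class GcDuringWarmup {

    // Total collections across all collectors (a collector reports -1 if undefined).
    static long totalGcCount() {
        long count = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long c = gc.getCollectionCount();
            if (c > 0) count += c;
        }
        return count;
    }

    // Total accumulated collection time in milliseconds across all collectors.
    static long totalGcTimeMillis() {
        long millis = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime();
            if (t > 0) millis += t;
        }
        return millis;
    }

    // Hypothetical allocation-heavy workload; returns how many buffers are still held.
    static int allocate(int n) {
        List<byte[]> garbage = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            garbage.add(new byte[1024]);
            if (garbage.size() > 1_000) garbage.clear(); // drop references so they become garbage
        }
        return garbage.size();
    }

    public static void main(String[] args) {
        for (int batch = 1; batch <= 5; batch++) {
            long gcBefore = totalGcCount();
            long gcTimeBefore = totalGcTimeMillis();
            long start = System.nanoTime();
            allocate(200_000);
            long ms = (System.nanoTime() - start) / 1_000_000;
            System.out.printf("batch %d: %d ms, GCs: %d, GC time: %d ms%n",
                    batch, ms, totalGcCount() - gcBefore, totalGcTimeMillis() - gcTimeBefore);
        }
    }
}
```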

5.Solutions

5.1.(Commercial, paid) Azul Platform Prime ReadyNow

5.1.1.What is Azul Prime ReadyNow?

ReadyNow is a feature of Azul Platform Prime that, when enabled, significantly improves application warm-up behavior.

5.1.2.What is warm-up?

Warm-up refers to the time required for a Java application to reach optimal performance. The just-in-time (JIT) compiler’s job is to provide optimal performance by generating optimized compiled code from application bytecode. This process takes some time as the JIT compiler looks for optimization opportunities based on analysis of the application.

5.1.3.The basic idea

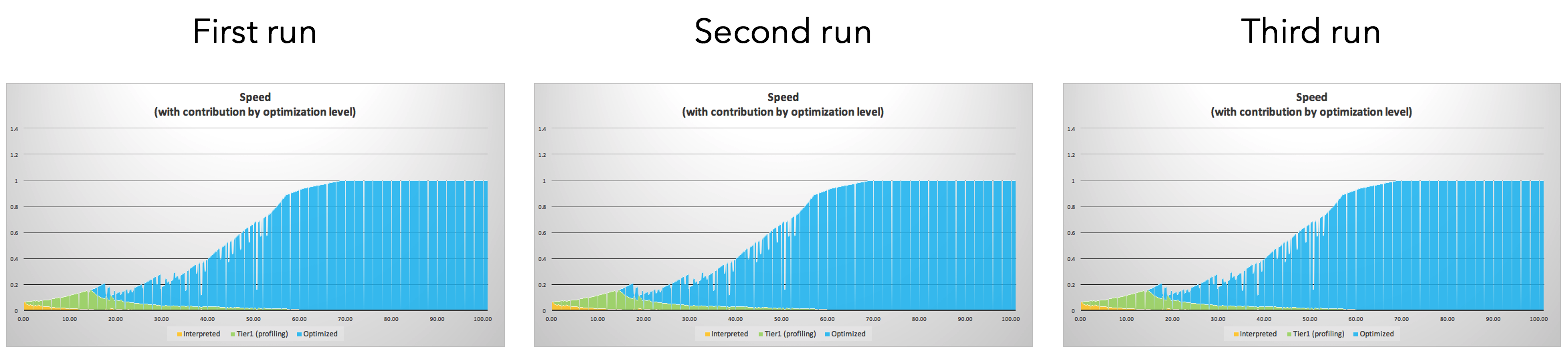

ReadyNow retains the profiling information collected during an application’s run so that subsequent runs do not have to learn from scratch again. Warm-up improves with each run of the application until peak performance is achieved.

5.1.4.Instructions

To enable ReadyNow, add the following command-line options, usually pointing both at the same profile log file:

- -XX:ProfileLogIn= followed by the log path: instructs Azul Platform Prime to use information from an existing profile log;
- -XX:ProfileLogOut= followed by the log path: records the compilation and runtime de-optimization decisions made during the run.

Running the application automatically generates or updates the profile log, which is then used on subsequent runs of the application to improve warm-up.
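The idea of persisting profiling information between runs can be illustrated with a toy model. The sketch below is only an analogy, not ReadyNow's implementation or file format: one "run" counts how often (hypothetical) methods are called and writes the counts to a file, and the next "run" reads the file back and immediately knows which methods are hot, much as -XX:ProfileLogOut and -XX:ProfileLogIn hand the JVM's learned decisions from one run to the next. The class name, method names and threshold are all hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

// Toy analogy only: NOT how ReadyNow works internally.
class ToyProfileLog {

    private final Map<String, Long> counts = new HashMap<>();

    // Called whenever a (hypothetical) instrumented method runs.
    void record(String method) {
        counts.merge(method, 1L, Long::sum);
    }

    // Loose analogue of -XX:ProfileLogOut: write what was learned during this run.
    void save(Path file) {
        Properties p = new Properties();
        counts.forEach((name, n) -> p.setProperty(name, Long.toString(n)));
        try (Writer w = Files.newBufferedWriter(file)) {
            p.store(w, "toy profile log");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Loose analogue of -XX:ProfileLogIn: methods that were hot last time, which a JVM
    // could compile early instead of re-discovering them.
    static Set<String> loadHotMethods(Path file, long threshold) {
        Properties p = new Properties();
        try (Reader r = Files.newBufferedReader(file)) {
            p.load(r);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Set<String> hot = new TreeSet<>();
        for (String name : p.stringPropertyNames()) {
            if (Long.parseLong(p.getProperty(name)) >= threshold) {
                hot.add(name);
            }
        }
        return hot;
    }

    public static void main(String[] args) {
        Path log = Path.of(System.getProperty("java.io.tmpdir"), "toy-profile.properties");
        // "Run 1": learn which methods are hot and persist it.
        ToyProfileLog run1 = new ToyProfileLog();
        for (int i = 0; i < 10_000; i++) run1.record("QuoteParser.parse");
        run1.record("Config.load");
        run1.save(log);
        // "Run 2": start with the hot set already known.
        System.out.println("compile early: " + loadHotMethods(log, 1_000));
    }
}
```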

5.1.5.Integrated development

Closed source

5.2.(Open source, free) CRaC

5.2.1.What is CRaC?

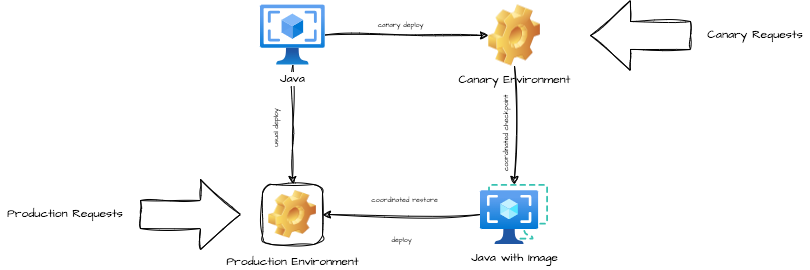

Inspired by CRIU (Checkpoint/Restore In Userspace), the CRaC (Coordinated Restore at Checkpoint) project researches the coordination of Java programs with mechanisms for checkpointing (taking an image, or snapshot, of) a Java instance while it is running. Restoring from an image can solve some of the problems with startup and warm-up times. The main goal of the project is to develop a new, mechanism-agnostic standard API that notifies Java programs about checkpoint and restore events. Other research activities will include, but are not limited to, integration with existing checkpoint/restore mechanisms, development of new mechanisms, and changes to the JVM and JDK to make images smaller and to ensure they are correct.
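The core idea of the CRaC API is coordination: resources that cannot survive a checkpoint (open sockets, files, connection pools) are notified so they can release themselves before the image is taken and re-create themselves after restore. In a CRaC JDK the real API is the jdk.crac.Resource interface, with beforeCheckpoint and afterRestore methods, registered through Core.getGlobalContext().register(...); the org.crac facade library exposes the same interfaces for use on other JDKs. To stay runnable on any JDK, the sketch below uses hypothetical plain-Java stand-ins with the same shape rather than the real classes.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java stand-in for CRaC's coordination idea; all names here are hypothetical.
// Real code would implement jdk.crac.Resource (or org.crac.Resource) and call
// Core.getGlobalContext().register(resource).
class CoordinationSketch {

    // Mirrors the shape of a CRaC Resource (the real methods also take a Context
    // argument and may throw Exception).
    interface CheckpointAware {
        void beforeCheckpoint();
        void afterRestore();
    }

    static final class Coordinator {
        private final List<CheckpointAware> resources = new ArrayList<>();

        void register(CheckpointAware resource) {
            resources.add(resource);
        }

        // Design choice of this sketch: release in reverse registration order,
        // restore in registration order (like unwinding and rebuilding a stack).
        void checkpointAndRestore(Runnable takeImage) {
            for (int i = resources.size() - 1; i >= 0; i--) {
                resources.get(i).beforeCheckpoint();
            }
            takeImage.run(); // with real CRaC, CRIU would dump the process image here
            for (CheckpointAware resource : resources) {
                resource.afterRestore();
            }
        }
    }

    // Hypothetical pool whose sockets cannot be part of a saved image.
    static final class FakeConnectionPool implements CheckpointAware {
        final List<String> events = new ArrayList<>();
        boolean open = true;

        @Override public void beforeCheckpoint() { open = false; events.add("closed"); }
        @Override public void afterRestore() { open = true; events.add("reopened"); }
    }

    public static void main(String[] args) {
        Coordinator coordinator = new Coordinator();
        FakeConnectionPool pool = new FakeConnectionPool();
        coordinator.register(pool);
        coordinator.checkpointAndRestore(
                () -> System.out.println("pool open while imaging? " + pool.open));
        System.out.println("events: " + pool.events);
    }
}
```

The pool is closed while the image is taken and reopened afterwards, which is the behavior a real Resource implementation would aim for.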

5.2.2.The basic idea

Feed input data (simulated requests) to a Java application running in a specific (canary) environment (CPU, memory, I/O, operating system, etc.). When the input reaches saturation (the request paths cover all use cases), freeze the running application and save a checkpoint of the JVM, which has now reached optimal performance, as snapshot files. Later, the application can be started from the saved image (in theory, even on a different physical machine).
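Deciding when the simulated traffic has reached saturation needs a concrete criterion. One simple proxy, assumed here rather than prescribed by CRaC, is to treat the application as saturated once recent batches of requests are no longer getting faster. The sketch below (hypothetical class, request handler, window size and tolerance) applies that rule; at that point the checkpoint would be triggered, for example with jcmd.

```java
import java.util.Arrays;

// Sketch: decide when warm-up traffic has "saturated", i.e. more requests no longer
// make the application faster. `window` and `tolerance` are hypothetical tuning knobs.
class SaturationDetector {

    // True when each of the last `window` batch times is within `tolerance` (relative)
    // of the fastest batch seen so far, i.e. performance has plateaued.
    static boolean isSaturated(double[] batchMillis, int window, double tolerance) {
        if (batchMillis.length < window + 1) {
            return false; // not enough history yet
        }
        double best = Arrays.stream(batchMillis).min().getAsDouble();
        for (int i = batchMillis.length - window; i < batchMillis.length; i++) {
            if (batchMillis[i] > best * (1 + tolerance)) {
                return false;
            }
        }
        return true;
    }

    // Hypothetical request handler standing in for a real endpoint.
    static long handleRequest(int seed) {
        long h = seed;
        for (int i = 0; i < 10_000; i++) {
            h = h * 31 + i;
        }
        return h;
    }

    public static void main(String[] args) {
        double[] times = new double[200];
        long sink = 0;
        for (int batch = 0; batch < times.length; batch++) {
            long start = System.nanoTime();
            for (int r = 0; r < 500; r++) {
                sink += handleRequest(r);
            }
            times[batch] = (System.nanoTime() - start) / 1e6;
            if (isSaturated(Arrays.copyOf(times, batch + 1), 5, 0.10)) {
                System.out.printf("saturated after %d batches: ready to checkpoint%n", batch + 1);
                break;
            }
        }
        System.out.println("checksum: " + sink);
    }
}
```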

5.2.3.Deployment process

Send simulated requests to the application running in the canary environment, create snapshot files once request saturation is reached, and then restore the application from the snapshot files in the production environment.

5.2.4.Instructions

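To use CRaC, add the following command-line options, usually pointing both at the same directory (here cr):

- -XX:CRaCCheckpointTo=cr: start the application with this option; when a checkpoint is triggered (for example by running jcmd with the target process ID and the JDK.checkpoint command), the JVM writes its image files to the cr directory;
- -XX:CRaCRestoreFrom=cr: start the JVM by restoring the image saved in the cr directory instead of launching the application from scratch.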

5.2.5.Integrated development

5.2.5.1.Get source code

git clone https://github.com/openjdk/crac.git -b {tag}

5.2.5.2.Manual compilation

bash configure

make images

mv build/linux-x86_64-server-release/images/jdk/ .

5.2.5.3.Download a compatible CRIU build

5.2.5.4.Extract it and copy the criu binary over the file of the same name in the JDK

cp criu-dist/sbin/criu jdk/lib/criu

5.2.5.5.Grant permissions (make criu owned by root and setuid)

sudo chown root:root jdk/lib/criu

sudo chmod u+s jdk/lib/criu

6.Verification

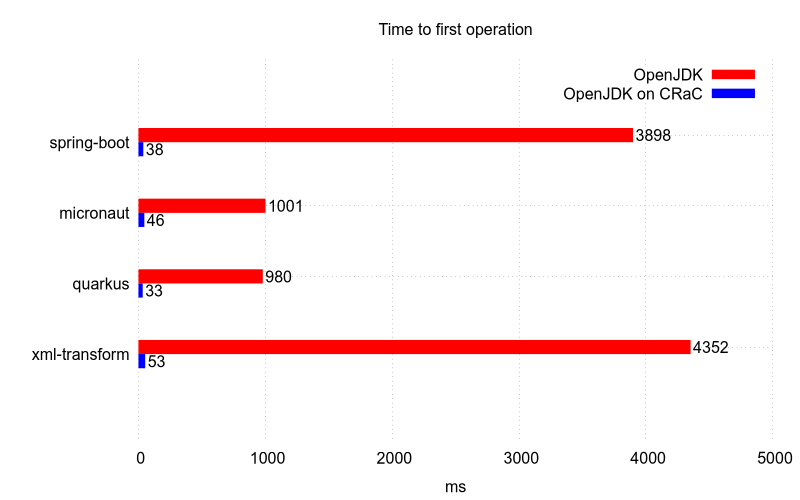

6.1.Official verification

6.1.1.Verification environment

- Hardware: laptop (Intel i7-5500U, 16 GB RAM, SSD)
- Kernel: Linux 5.7.4-arch1-1
- Operating system: ubuntu:18.04-based image
- Platform: Arch Linux

6.1.2.Verification scenario

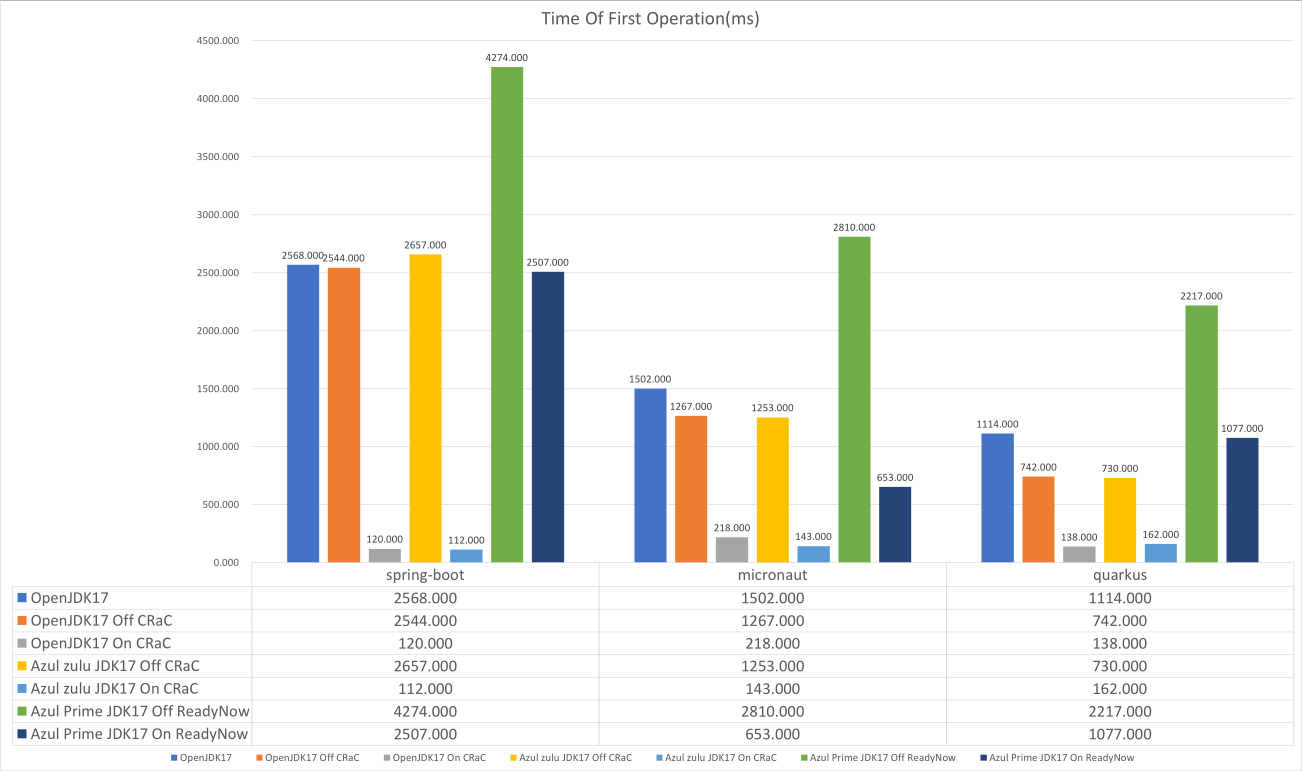

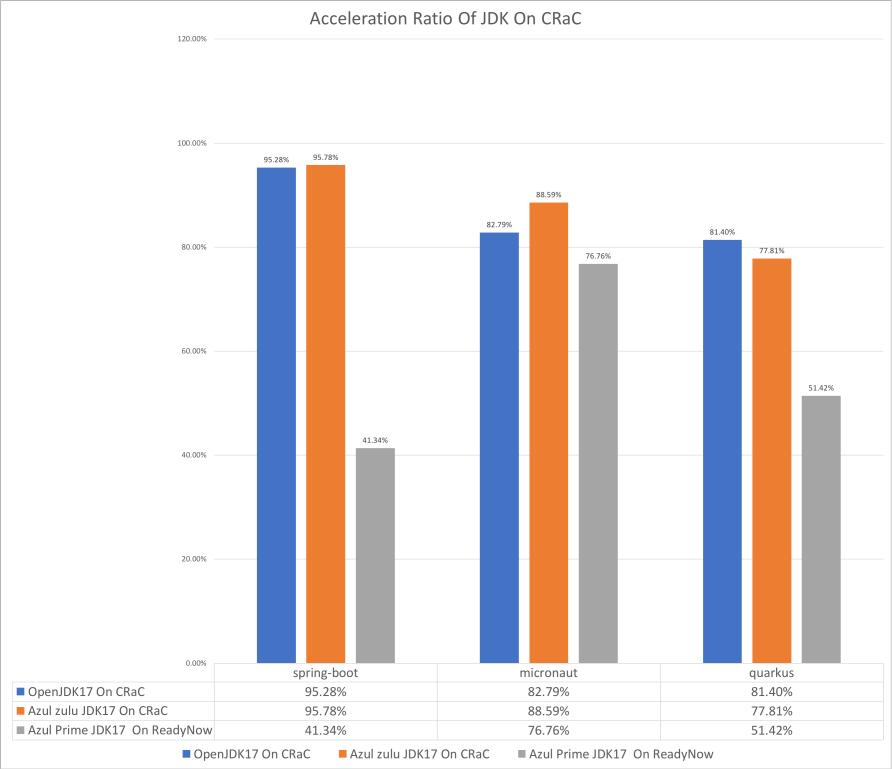

6.1.2.1.Scenario 1: Time To First Operation

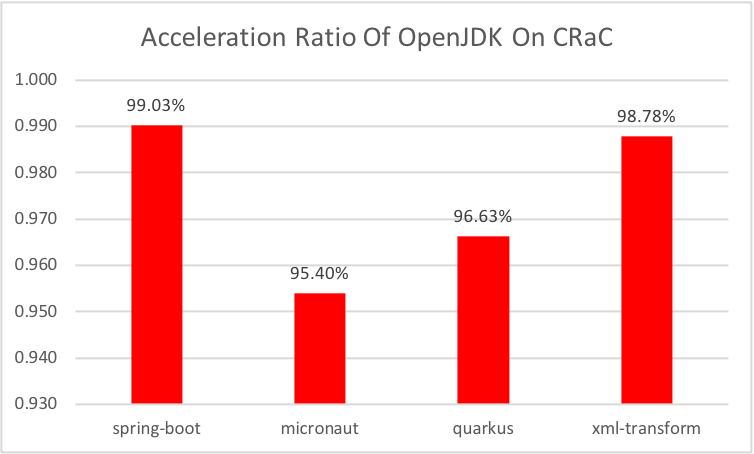

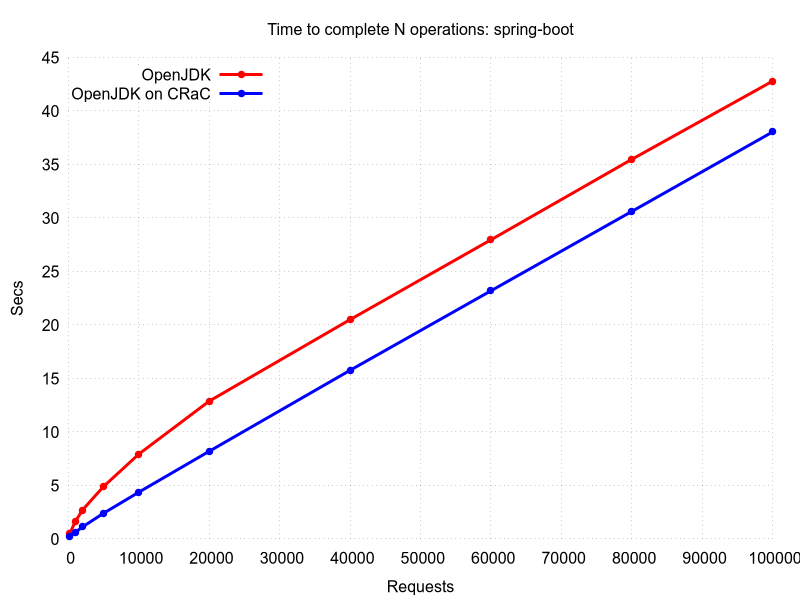

6.1.2.2.Scenario 2: Time to Complete N Operations: Spring Boot (OpenJDK with CRaC on/off)

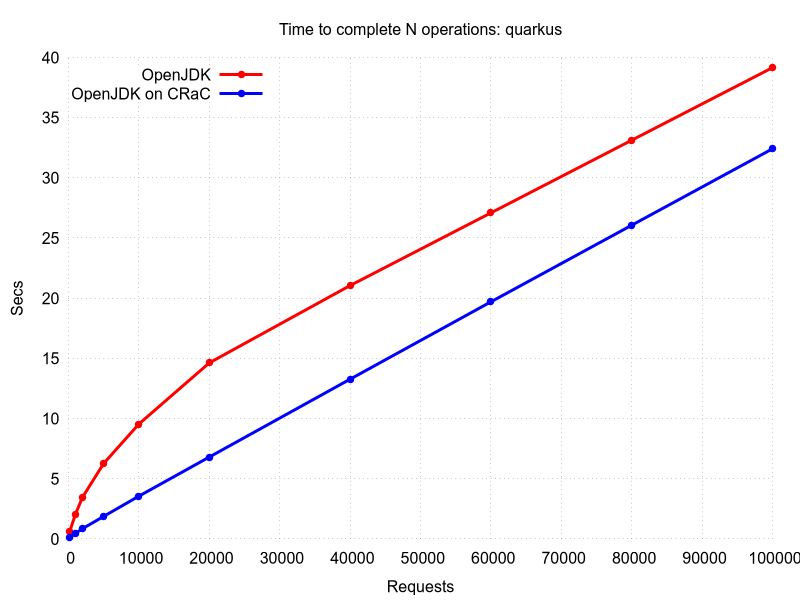

6.1.2.3.Scenario 3: Time to Complete N Operations: Quarkus (OpenJDK with CRaC on/off)

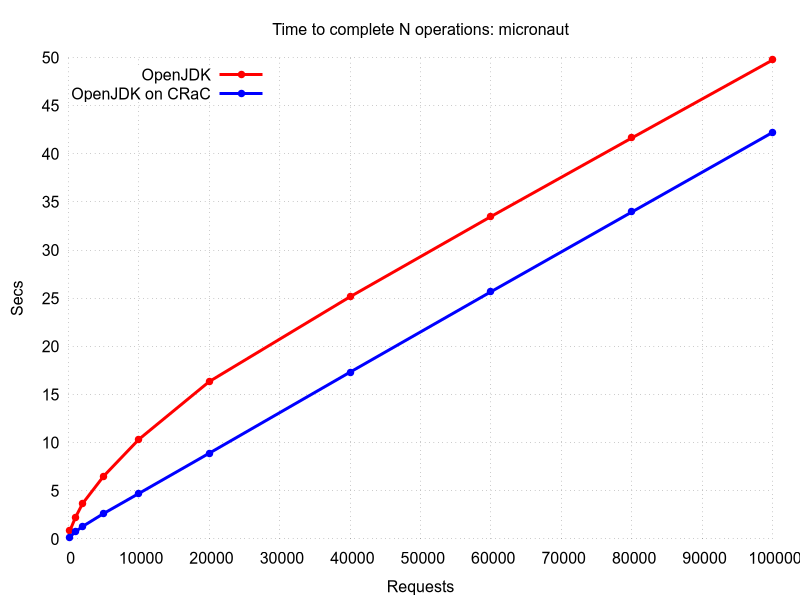

6.1.2.4.Scenario 4: Time to Complete N Operations: Micronaut (OpenJDK with CRaC on/off)

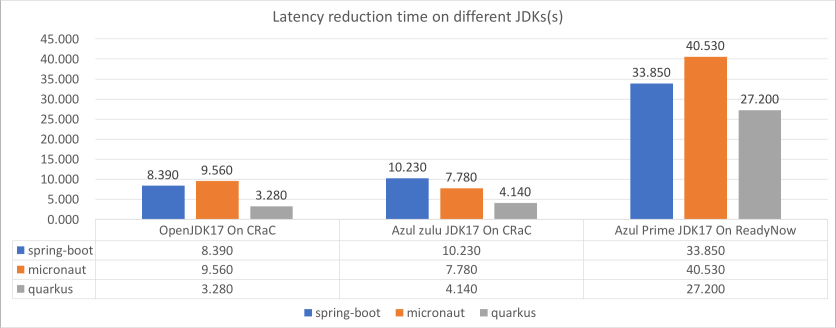

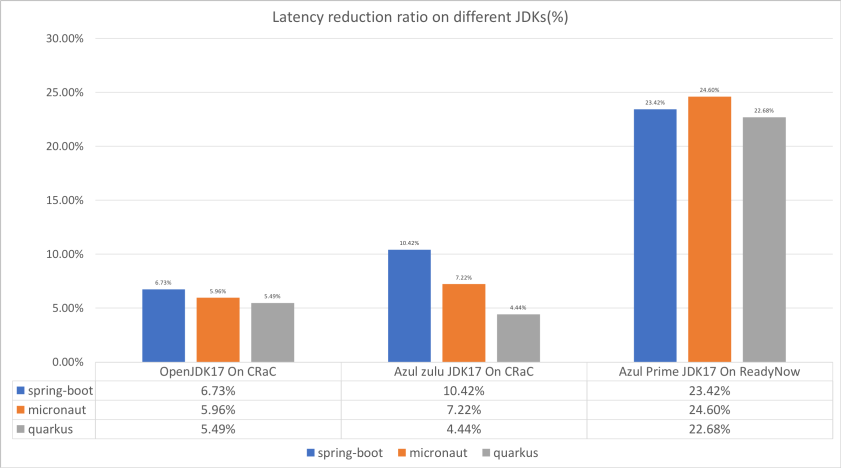

6.2.Local verification

6.2.1.Verification environment

- Hardware: laptop (3.1 GHz Intel Core i5, 4 GB RAM, 50 GB SSD)
- Kernel: 3.10.0-1160.102.1.el7.x86_64
- Operating system: CentOS 7.9 (VM)
- Platform: x86_64

6.2.2.Verification scenario

6.2.2.1.Scenario 1: Time To First Operation

6.2.2.2.Scenario 2: Time To Complete 100000 Operations
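The two local scenarios can be measured with a small harness like the sketch below. It assumes both metrics are measured from the same reference point: by default the JVM start time from RuntimeMXBean.getStartTime(), or optionally a millisecond timestamp passed as the first argument (for example captured by a launch script), since a restored JVM may report its start time differently from a cold start. The class name and the timed operation are hypothetical placeholders; records need Java 16+.

```java
import java.lang.management.ManagementFactory;
import java.util.function.IntUnaryOperator;

// Sketch: time to first operation and time to complete N operations,
// both measured from the same start timestamp (milliseconds since the epoch).
class StartupMetrics {

    record Result(long firstOpMillis, long allOpsMillis, long checksum) {}

    // Runs op n times (n >= 1) and reports elapsed time after the first and after the last call.
    static Result measure(long startMillis, int n, IntUnaryOperator op) {
        long checksum = op.applyAsInt(0);                        // operation #1
        long firstOp = System.currentTimeMillis() - startMillis;
        for (int i = 1; i < n; i++) {                            // operations #2..#n
            checksum += op.applyAsInt(i);
        }
        long allOps = System.currentTimeMillis() - startMillis;
        return new Result(firstOp, allOps, checksum);
    }

    public static void main(String[] args) {
        // Optional first argument: an external start timestamp in ms since the epoch.
        long start = args.length > 0
                ? Long.parseLong(args[0])
                : ManagementFactory.getRuntimeMXBean().getStartTime();
        Result r = measure(start, 100_000, i -> Integer.toString(i).hashCode());
        System.out.printf("time to first operation: %d ms%n", r.firstOpMillis());
        System.out.printf("time to complete 100000 operations: %d ms%n", r.allOpsMillis());
        System.out.println("checksum: " + r.checksum());
    }
}
```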